PatSeer’s AI Classifier achieves 95% accuracy on Gold Standard Datasets

The latest PatSeer release includes an AI Classifier that learns from your existing taxonomy and the records you have already categorized against it, and then predicts categories for newer, uncategorized records. The classifier supports hierarchical taxonomies and saves you the time and effort of manually classifying new records. Once enabled on your projects, the Classifier continues to learn in the background from your feedback, and multiple modes of operation let you choose the level of intervention you want in the process.

PatSeer’s AI Classifier is built on the latest AI NLP stack, and the underlying model has been customized with the semantic rules in ReleSense™ to achieve greater precision on patents and scientific literature.

Choosing a benchmark to evaluate the performance

To benchmark the AI Classifier’s performance, we tested its accuracy against two Gold Standard Datasets (Quantum Computing and Cannabinoid Edibles) that were released as part of a collaboration between Aistemos Ltd and Patinformatics LLC and are available at https://github.com/swh/classification-gold-standard/tree/master/data.

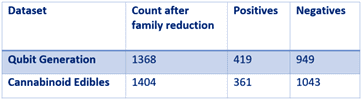

The two topics covered by the gold standard datasets are Cannabinoid edibles and Qubit Generation for Quantum Computing.

- Cannabinoid edibles – The positive set of patents discusses food and beverages, pharmaceutical and cosmetic products that include cannabinoid substances. The negative set of patents includes food products that comprise a substance similar to a cannabinoid but not a cannabinoid itself.

- Qubit Generation for Quantum Computing – This dataset covers patents that discuss the various means of generating qubits for use in a quantum mechanics-based computing system. The positive set of patents discusses types of qubits, including superconducting loops, topological, quantum-dot-based, and ion-trap methods. The negative set of patents covers excluded technologies: applications, algorithms, and other auxiliary aspects of quantum computing that do not mention a hardware component, as well as hardware for other quantum phenomena outside of qubit generation.

The original Qubit dataset (as released on GitHub) includes multiple members of the same patent family, and a family id (DOCDB family) is provided for each record. There is a fairly equal number of Positive and Negative records when each family member is counted separately. However, the testing methodology required randomly selected records to go into the training dataset, and because members of a family typically share largely the same text, having their family members in the prediction dataset would skew the performance metrics of the AI classifier.

We, therefore, deduplicated the dataset to one member per family.
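The deduplication step can be sketched as follows. This is a minimal illustration, not the procedure actually used: the field names (`family_id`, `publication`, `label`) are hypothetical, and keeping the first member encountered in each family is an assumption, since the choice of which member was retained is not stated here.

```python
from typing import Dict, Iterable, List


def dedupe_by_family(records: Iterable[Dict], family_key: str = "family_id") -> List[Dict]:
    """Keep the first record seen for each family id, preserving input order.

    Assumption: "first seen" is an arbitrary illustrative rule for picking
    the single member that represents a family.
    """
    seen = set()
    unique = []
    for record in records:
        family = record[family_key]
        if family not in seen:
            seen.add(family)
            unique.append(record)
    return unique


# Hypothetical records: two members of family 101, one member of family 202.
records = [
    {"publication": "US-1", "family_id": 101, "label": "positive"},
    {"publication": "EP-1", "family_id": 101, "label": "positive"},
    {"publication": "US-2", "family_id": 202, "label": "negative"},
]
deduped = dedupe_by_family(records)
```

After this step, no family can appear in both the training and the prediction data, which is the leakage the deduplication is meant to prevent.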

After deduplication, the datasets have more Negative than Positive records, implying that positive records averaged more family members than negative records in the original dataset. It is also worth noting that an unbalanced dataset can affect common AI metrics such as Accuracy (defined below).

Testing Classifier performance on the gold standard dataset

Test Preparation

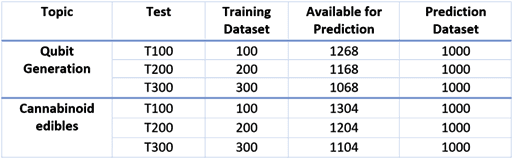

An AI classifier’s accuracy varies with the size of the training data, and larger curated training datasets usually lead to better prediction accuracy. We evaluated PatSeer’s AI Classifier with three training dataset sizes of 100, 200, and 300 records. Each contained an equal number of positively and negatively classified records.

The performance of the classifier was evaluated by comparing each prediction against the Positive/Negative category defined in the gold standard and marking it as a TRUE POSITIVE (TP), TRUE NEGATIVE (TN), FALSE POSITIVE (FP), or FALSE NEGATIVE (FN). We chose Accuracy and F1 scores to benchmark overall performance: Accuracy measures overall correctness, while F1 combines precision and recall. The two metrics are defined as follows:

- Accuracy – Number of correctly classified data instances over the total number of data instances.

- F1-score – F1 score is the harmonic mean of precision and recall. It takes both false positives and false negatives into account.
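The two definitions above correspond to the standard formulas Accuracy = (TP + TN) / (TP + TN + FP + FN) and F1 = 2 × Precision × Recall / (Precision + Recall), with Precision = TP / (TP + FP) and Recall = TP / (TP + FN). A minimal sketch of the calculation is shown below; the function name and the counts are illustrative only and are not results from this benchmark.

```python
from typing import Dict


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Compute accuracy, precision, recall and F1 from confusion-matrix counts.

    Ratios with a zero denominator are reported as 0.0 (a common convention).
    """
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative counts only (not taken from the benchmark results).
metrics = classification_metrics(tp=40, tn=50, fp=5, fn=5)

# A classifier that labels every record negative on a 10/90 split:
all_negative = classification_metrics(tp=0, tn=90, fp=0, fn=10)
```

The second call illustrates why both metrics are reported: on an unbalanced set of 10 positives and 90 negatives, labelling everything negative still gives an accuracy of 0.9, while the F1 score drops to 0.0.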

The T100, T200, and T300 training datasets had a total of 100, 200, and 300 records respectively, and comprised an equal number of positive and negative marked records. The table below gives the dataset sizes used for training and prediction:

To keep results comparable across the different training dataset sizes, the prediction dataset was restricted to a random set of 1000 records from the available prediction pool. The tests were run on a server with an 8-core Intel Xeon CPU and an NVIDIA T4 Tensor Core GPU. In each run, the training time averaged 15 minutes and the inference time averaged 20 minutes.
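The sampling described above can be sketched as follows. This is an illustration under stated assumptions rather than the actual test harness: the function name, the `label` and `id` fields, and the fixed random seed are all hypothetical, and the prediction pool is assumed to be every deduplicated record not selected for training.

```python
import random
from typing import Dict, List, Tuple


def split_train_predict(
    records: List[Dict],
    n_train: int,
    n_predict: int = 1000,
    label_key: str = "label",
    seed: int = 0,
) -> Tuple[List[Dict], List[Dict]]:
    """Draw a class-balanced training set, then sample the prediction set
    from the remaining records (assumed to form the prediction pool)."""
    rng = random.Random(seed)
    positives = [r for r in records if r[label_key] == "positive"]
    negatives = [r for r in records if r[label_key] == "negative"]
    per_class = n_train // 2
    if len(positives) < per_class or len(negatives) < per_class:
        raise ValueError("not enough records in one class for a balanced training set")
    rng.shuffle(positives)
    rng.shuffle(negatives)
    train = positives[:per_class] + negatives[:per_class]
    pool = positives[per_class:] + negatives[per_class:]
    predict = rng.sample(pool, min(n_predict, len(pool)))
    return train, predict


# Synthetic records for illustration: 400 positives and 1600 negatives.
records = (
    [{"id": i, "label": "positive"} for i in range(400)]
    + [{"id": 400 + i, "label": "negative"} for i in range(1600)]
)
train, predict = split_train_predict(records, n_train=100)  # a "T100"-style split
```

Fixing the prediction set size at 1000 regardless of `n_train` is what allows the scores from the three training sizes to be compared on an equal footing.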

The results

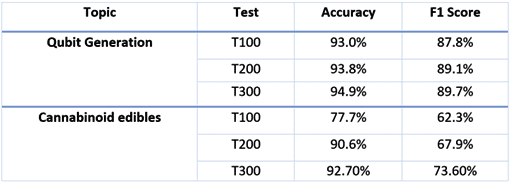

For the Qubit dataset, PatSeer’s AI Classifier performed well in all three tests, whereas for the Cannabinoid dataset superior performance was achieved with the T200 and T300 training dataset sizes.

The table below lists the Accuracy and F1 scores of PatSeer’s AI Classifier for each dataset:

Multiple iterations of the above tests yielded ~5% variation in these metrics. One of the main factors contributing to this variation was the varying count of machine-translated records, or records without claims, in the training dataset.

AI for classifying innovation

Most companies actively maintain continuously updated datasets on their technologies of interest and categorize them against their internal taxonomies for competitive intelligence and technology watch projects. Manual classification is subject to human error and tends to vary when the subject-matter expert tasked with it changes, so the process is not 100% accurate.

PatSeer’s AI Classifier is designed to reduce the laborious and never-ending classification work that such datasets require. As the results above show, it offers near-manual levels of accuracy and builds upon the existing taxonomies that organizations have curated manually.