Case Study: Auto-Categorization of Hand Gesture Recognition Patents Using PatSeer’s AI Classifier

Accurate, automated patent categorization has become essential because it lets you view an industry’s IP landscape through your own lens. Manual categorization is time-consuming, laborious, and sometimes not feasible simply because of a lack of resources such as subject-matter experts. Although CPC and IPC classes are useful for categorizing patents, they are relatively broad and may not map well onto your own categories. With these issues in mind, we designed PatSeer’s AI Classifier to eliminate the never-ending classification work that continuously monitored technology areas require.

Built on the latest AI & NLP stack, the underlying Classifier model has been customized with semantic rules in ReleSense™ to achieve greater precision on patent and scientific literature. The AI Classifier automatically learns from your existing taxonomy and the records you have already categorized against it, and uses them to predict categories for newer, uncategorized records. It supports hierarchical taxonomies and saves you the time and effort of classifying new records manually. Once enabled on your projects, the Classifier continues to learn in the background from your feedback. Multiple modes of operation let you choose how much you want to intervene in the process.

This case study evaluates PatSeer’s AI Classifier using a manually classified dataset of hand gesture recognition patents.

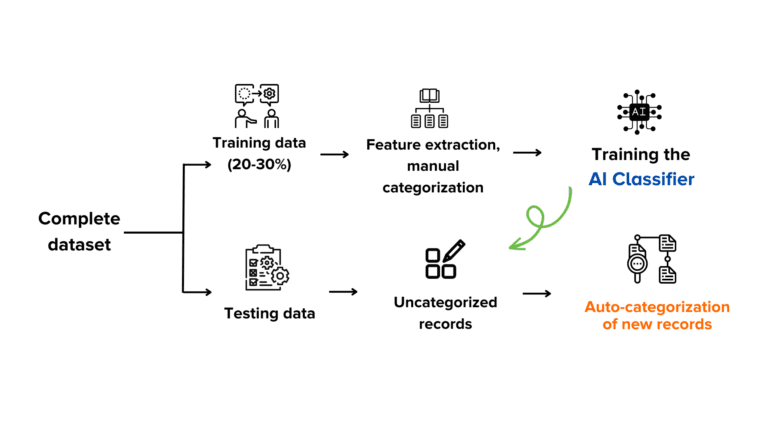

Dividing the dataset for training and testing purposes

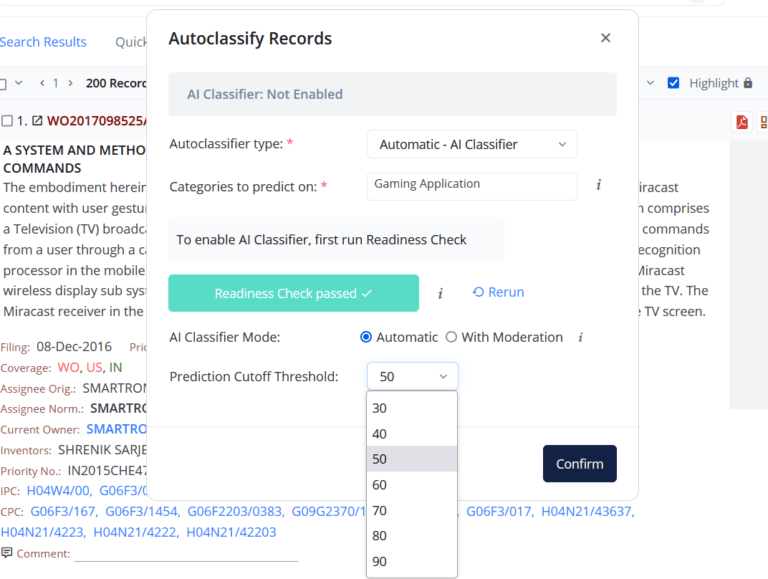

Because hand gesture recognition has a large number of applications, we evaluated the Classifier on a subset of the matching patents: 200 records in total. For the training dataset, we manually categorized 60 records (15 per category) into four categories: Gaming, TV, Sign Language, and Medical Applications. The AI Classifier built a prediction model from the training dataset and categorized the remaining 140 records. For this test, the prediction threshold (the value that decides whether or not a patent is classified under a given category) was set to 60.
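The threshold rule can be illustrated with a small sketch. This is a hypothetical example, not PatSeer’s implementation or API: it assumes the Classifier produces a confidence score from 0 to 100 for each category and assigns every category whose score is at or above the threshold. The function name, record IDs, and scores are invented for illustration.

```python
# Hypothetical sketch, not PatSeer's API: assumes one confidence score
# (0-100) per category, and that a score at or above the threshold assigns it.
THRESHOLD = 60  # prediction threshold used in this case study


def assign_categories(scores: dict[str, float], threshold: float = THRESHOLD) -> set[str]:
    """Return every category whose score meets or exceeds the threshold.

    A record may receive several categories, or none at all.
    """
    return {category for category, score in scores.items() if score >= threshold}


# Invented scores for two uncategorized records.
record_scores = {
    "REC-001": {"Gaming": 82, "TV": 64, "Sign Language": 12, "Medical Applications": 5},
    "REC-002": {"Gaming": 30, "TV": 41, "Sign Language": 55, "Medical Applications": 20},
}

for record_id, scores in record_scores.items():
    print(record_id, sorted(assign_categories(scores)))
```

Under these assumptions, raising the threshold trades recall for precision: fewer categories are assigned, but those that are assigned are more likely to be correct.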

AI Classifier performance metrics

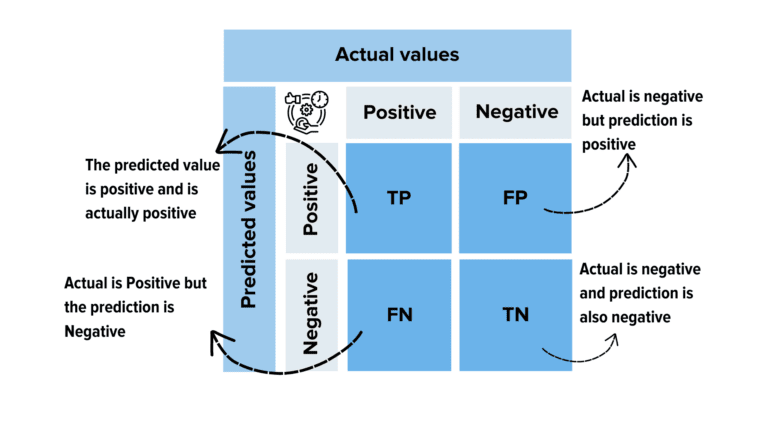

To evaluate the AI Classifier’s performance, we prepared a confusion matrix and derived standard classification metrics from it. A confusion matrix is a table with four possible combinations of predicted and actual values:

- True positive (TP): The record is predicted to belong to the category and actually does.

- False positive (FP): The record is predicted to belong to the category but actually does not.

- False negative (FN): The record is predicted not to belong to the category but actually does.

- True negative (TN): The record is predicted not to belong to the category and actually does not.

To build the confusion matrix, we compared the predicted categories (those assigned by the AI Classifier) with the actual categories (our manual categorization). The TP, FP, TN, and FN values were then calculated for each patent number from its actual and predicted values.
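One common way to tally these values for a multi-category dataset is to treat every (record, category) pair as a separate yes/no decision. The case study does not say exactly how its counts were aggregated, so the sketch below is an assumption; the function name and the three records are hypothetical.

```python
# Hypothetical sketch: each record's actual (manual) and predicted categories
# are sets, and every (record, category) pair is scored as one binary decision.
CATEGORIES = ["Gaming", "TV", "Sign Language", "Medical Applications"]


def confusion_counts(actual: dict[str, set[str]],
                     predicted: dict[str, set[str]],
                     categories: list[str]) -> dict[str, int]:
    """Tally TP, FP, FN and TN over all (record, category) pairs."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for record_id, true_labels in actual.items():
        pred_labels = predicted.get(record_id, set())
        for category in categories:
            is_actual = category in true_labels
            is_predicted = category in pred_labels
            if is_predicted and is_actual:
                counts["TP"] += 1
            elif is_predicted:
                counts["FP"] += 1
            elif is_actual:
                counts["FN"] += 1
            else:
                counts["TN"] += 1
    return counts


# Invented example: manual labels vs. Classifier predictions for three records.
actual = {"REC-001": {"Gaming"}, "REC-002": {"TV"}, "REC-003": {"Medical Applications"}}
predicted = {"REC-001": {"Gaming", "TV"}, "REC-002": set(), "REC-003": {"Medical Applications"}}
print(confusion_counts(actual, predicted, CATEGORIES))
```

With four categories, each record contributes four decisions, so the counts for the three records above sum to 12.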

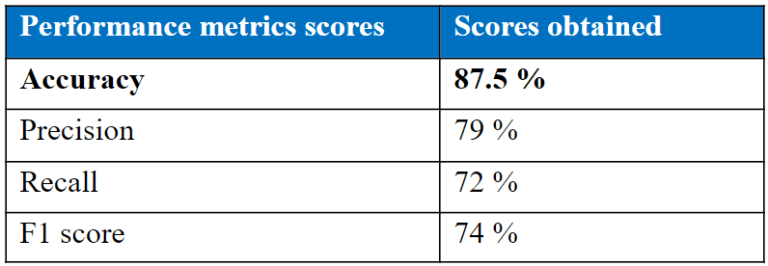

We considered four performance metrics:

- Accuracy: The ratio of correct predictions (TP + TN) to the total number of predictions.

- Recall: The ability of a model to find all the relevant cases within a dataset, calculated as TP / (TP + FN).

- Precision: The ability of a model to identify only the relevant data points, calculated as TP / (TP + FP).

- F1 score: The harmonic mean of precision and recall. Because it accounts for both false positives and false negatives, it is more informative than accuracy on an imbalanced dataset.
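The four scores reduce to standard formulas over the confusion-matrix counts. The sketch below computes them in Python rather than Excel; the function name is hypothetical and the counts are invented, not taken from this study. Returning 0.0 when a denominator is zero is one common convention, assumed here.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Compute accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative counts only; these are not the case study's actual figures.
print(classification_metrics(tp=2, fp=1, fn=1, tn=8))
```

Because accuracy includes TN in the numerator, it can stay high even when a rare category is often missed; precision, recall, and F1 ignore TN and expose that weakness.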

Results of the AI Classifier’s evaluation

We entered all the data into Excel and applied the standard formulas for these four scores, which gave the performance results shown below:

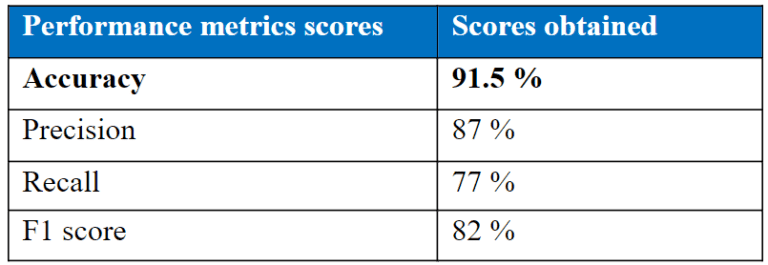

Detecting errors made in our manual categorization

To understand in depth what contributed to the accuracy score of 87.5%, we rechecked the records that were not true positives. We found a set of records that the Classifier had tagged under Sign Language Interpretation but that our manual categorization had not. On cross-checking, we concluded that the manual categorization was wrong and that these records clearly belonged to the Sign Language category. We therefore counted them as true positives rather than false positives and updated the TP value accordingly. This resulted in the final performance scores shown in the table below:

We noted that manual categorization is also prone to human error and can vary depending on who reviews the records. In our test case, the AI Classifier predicted the correct category for these records and helped us identify errors made during manual categorization. For best results, we advise continuously updating the datasets for technology areas of interest in competitive intelligence projects.
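The correction described in the previous section, where records first scored as false positives turned out to be mislabeled by hand, can be sketched as follows. All record IDs and labels are hypothetical; the point is only that fixing the ground truth moves those records from FP to TP without changing the Classifier’s predictions.

```python
# Hypothetical sketch: record IDs and labels are invented for illustration.
def tp_fp_for_category(actual: dict[str, set[str]],
                       predicted: dict[str, set[str]],
                       category: str) -> tuple[int, int]:
    """Count true and false positives for a single category."""
    tp = fp = 0
    for record_id, pred_labels in predicted.items():
        if category not in pred_labels:
            continue  # not a positive prediction, so neither TP nor FP
        if category in actual.get(record_id, set()):
            tp += 1
        else:
            fp += 1
    return tp, fp


actual = {"REC-010": {"Sign Language"}, "REC-011": {"Gaming"}, "REC-012": {"Gaming"}}
predicted = {"REC-010": {"Sign Language"},
             "REC-011": {"Sign Language"},
             "REC-012": {"Sign Language", "Gaming"}}

before = tp_fp_for_category(actual, predicted, "Sign Language")

# On review, REC-011 and REC-012 also belong to Sign Language, so the manual
# labels are corrected in a copy; the predictions stay unchanged.
corrected = {record_id: set(labels) for record_id, labels in actual.items()}
for record_id in ("REC-011", "REC-012"):
    corrected[record_id].add("Sign Language")

after = tp_fp_for_category(corrected, predicted, "Sign Language")
print("before:", before, "after:", after)
```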